Running the MapReduce Examples (hadoop-mapreduce-examples-*.jar)

[root@master hadoop-2.7.4]# ./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar

An example program must be given as the first argument.

Valid program names are:

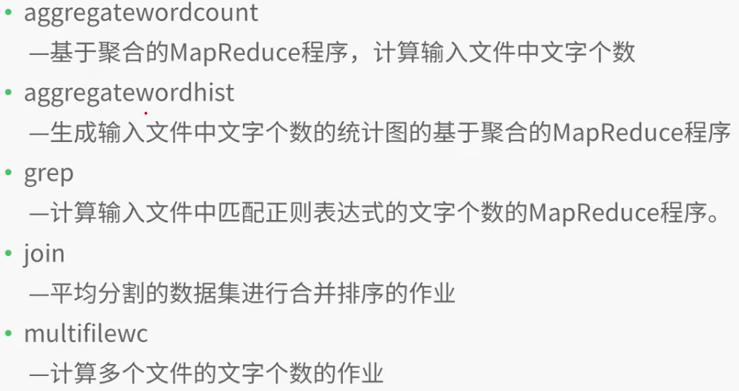

aggregatewordcount: An Aggregate based map/reduce program that counts the words in the input files.

aggregatewordhist: An Aggregate based map/reduce program that computes the histogram of the words in the input files.

bbp: A map/reduce program that uses Bailey-Borwein-Plouffe to compute exact digits of Pi.

dbcount: An example job that count the pageview counts from a database.

distbbp: A map/reduce program that uses a BBP-type formula to compute exact bits of Pi.

grep: A map/reduce program that counts the matches of a regex in the input.

join: A job that effects a join over sorted, equally partitioned datasets

multifilewc: A job that counts words from several files.

pentomino: A map/reduce tile laying program to find solutions to pentomino problems.

pi: A map/reduce program that estimates Pi using a quasi-Monte Carlo method.

randomtextwriter: A map/reduce program that writes 10GB of random textual data per node.

randomwriter: A map/reduce program that writes 10GB of random data per node.

secondarysort: An example defining a secondary sort to the reduce.

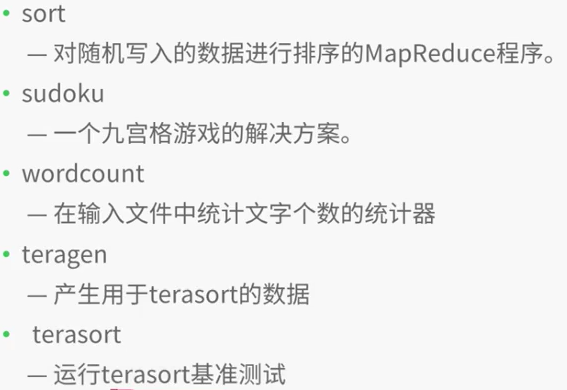

sort: A map/reduce program that sorts the data written by the random writer.

sudoku: A sudoku solver.

teragen: Generate data for the terasort

terasort: Run the terasort

teravalidate: Checking results of terasort

wordcount: A map/reduce program that counts the words in the input files.

wordmean: A map/reduce program that counts the average length of the words in the input files.

wordmedian: A map/reduce program that counts the median length of the words in the input files.

wordstandarddeviation: A map/reduce program that counts the standard deviation of the length of the words in the input files.
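The `pi` entry above describes a quasi-Monte Carlo estimate. As a rough illustration of the idea (not a copy of Hadoop's `QuasiMonteCarlo` source), the Python sketch below takes points from a 2-D Halton sequence in the unit square, counts how many fall inside the inscribed circle, and multiplies that fraction by 4. The function names and the choice of bases 2 and 3 are assumptions made for this sketch.

```python
import math


def radical_inverse(index, base):
    """Mirror the base-`base` digits of `index` around the radix point."""
    result = 0.0
    fraction = 1.0 / base
    while index > 0:
        result += (index % base) * fraction
        index //= base
        fraction /= base
    return result


def estimate_pi(num_samples):
    """Estimate pi from `num_samples` low-discrepancy points in the unit square."""
    inside = 0
    for i in range(1, num_samples + 1):
        # Shift the point so the circle of radius 0.5 is centred at the origin.
        x = radical_inverse(i, 2) - 0.5
        y = radical_inverse(i, 3) - 0.5
        if x * x + y * y <= 0.25:
            inside += 1
    # Area of circle / area of square = pi / 4.
    return 4.0 * inside / num_samples


if __name__ == "__main__":
    print(estimate_pi(10000), "vs", math.pi)
```

In the real job the work is divided across map tasks: `<nMaps>` sets the number of map tasks and `<nSamples>` the number of points each one tests, so the total sample count is their product.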

[root@master hadoop-2.7.4]# ./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar wordcount

Usage: wordcount <in> [<in>...] <out>

[root@master hadoop-2.7.4]# ./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar pi

Usage: org.apache.hadoop.examples.QuasiMonteCarlo <nMaps> <nSamples>

Generic options supported are

-conf <configuration file> specify an application configuration file

-D <property=value> use value for given property

-fs <local|namenode:port> specify a namenode

-jt <local|resourcemanager:port> specify a ResourceManager

-files <comma separated list of files> specify comma separated files to be copied to the map reduce cluster

-libjars <comma separated list of jars> specify comma separated jar files to include in the classpath.

-archives <comma separated list of archives> specify comma separated archives to be unarchived on the compute machines.

The general command line syntax is

bin/hadoop command [genericOptions] [commandOptions]

[root@master hadoop-2.7.4]# jps

4912 NameNode

9265 NodeManager

9155 ResourceManager

9561 Jps

5195 SecondaryNameNode

5038 DataNode

[root@master hadoop-2.7.4]# ./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar wordcount /input /output2

17/12/17 16:28:33 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

17/12/17 16:28:35 INFO input.FileInputFormat: Total input paths to process : 1

17/12/17 16:28:35 INFO mapreduce.JobSubmitter: number of splits:1

17/12/17 16:28:35 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1513499297109_0001

17/12/17 16:28:36 INFO impl.YarnClientImpl: Submitted application application_1513499297109_0001

17/12/17 16:28:37 INFO mapreduce.Job: The url to track the job: http://centos:8088/proxy/application_1513499297109_0001/

17/12/17 16:28:37 INFO mapreduce.Job: Running job: job_1513499297109_0001

17/12/17 16:29:06 INFO mapreduce.Job: Job job_1513499297109_0001 running in uber mode : false

17/12/17 16:29:06 INFO mapreduce.Job: map 0% reduce 0%

17/12/17 16:29:25 INFO mapreduce.Job: map 100% reduce 0%

17/12/17 16:29:40 INFO mapreduce.Job: map 100% reduce 100%

17/12/17 16:29:41 INFO mapreduce.Job: Job job_1513499297109_0001 completed successfully

17/12/17 16:29:42 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=339

FILE: Number of bytes written=242217

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=267

HDFS: Number of bytes written=217

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=16910

Total time spent by all reduces in occupied slots (ms)=9673

Total time spent by all map tasks (ms)=16910

Total time spent by all reduce tasks (ms)=9673

Total vcore-milliseconds taken by all map tasks=16910

Total vcore-milliseconds taken by all reduce tasks=9673

Total megabyte-milliseconds taken by all map tasks=17315840

Total megabyte-milliseconds taken by all reduce tasks=9905152

Map-Reduce Framework

Map input records=4

Map output records=31

Map output bytes=295

Map output materialized bytes=339

Input split bytes=95

Combine input records=31

Combine output records=29

Reduce input groups=29

Reduce shuffle bytes=339

Reduce input records=29

Reduce output records=29

Spilled Records=58

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=166

CPU time spent (ms)=1380

Physical memory (bytes) snapshot=279044096

Virtual memory (bytes) snapshot=4160716800

Total committed heap usage (bytes)=138969088

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=172

File Output Format Counters

Bytes Written=217

[root@master hadoop-2.7.4]# ./bin/hdfs dfs -ls /output2/

Found 2 items

-rw-r--r-- 1 root supergroup 0 2017-12-17 16:29 /output2/_SUCCESS

-rw-r--r-- 1 root supergroup 217 2017-12-17 16:29 /output2/part-r-00000

[root@master hadoop-2.7.4]# ./bin/hdfs dfs -cat /output2/part-r-00000

78 1

ai 1

daokc 1

dfksdhlsd 1

dkhgf 1

docke 1

docker 1

erhejd 1

fdjk 1

fdskre 1

fjdk 1

fjdks 1

fjksl 1

fsd 1

go 1

haddop 1

hello 3

hi 1

hki 1

jfdk 1

scalw 1

sd 1

sdkf 1

sdkfj 1

sdl 1

sstem 1

woekd 1

yfdskt 1

yuihej 1
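The counters and the output above fit together: 31 map output records were combined into 29 records, and the 29 output lines sum to 31 occurrences because `hello` appears three times. A minimal Python sketch of the same map, combine/reduce, and sort-by-key flow is shown below. It is an illustration only, not the Hadoop `WordCount` source; the function names and the sample lines are hypothetical, and the original `/input` file is not shown in this transcript.

```python
from collections import Counter


def map_phase(lines):
    """Emit one (word, 1) pair per whitespace-separated token."""
    for line in lines:
        for word in line.split():
            yield word, 1


def sum_counts(pairs):
    """Sum the counts per word; the same logic serves as combiner and reducer."""
    totals = Counter()
    for word, count in pairs:
        totals[word] += count
    return totals


def word_count(lines):
    """Return (number of map output records, list of (word, count) sorted by word)."""
    mapped = list(map_phase(lines))
    totals = sum_counts(mapped)
    # Reducer output is ordered by key; for ASCII words Python's string
    # ordering matches a byte-wise comparison.
    return len(mapped), sorted(totals.items())


if __name__ == "__main__":
    # Hypothetical input, not the file used in the transcript.
    sample = ["hello docker", "hello go", "hi hello"]
    records, counts = word_count(sample)
    print("map output records:", records)
    for word, count in counts:
        print(word, count)
```

With this sample, six map output records collapse into four output lines, the same relationship as 31 and 29 in the job above.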

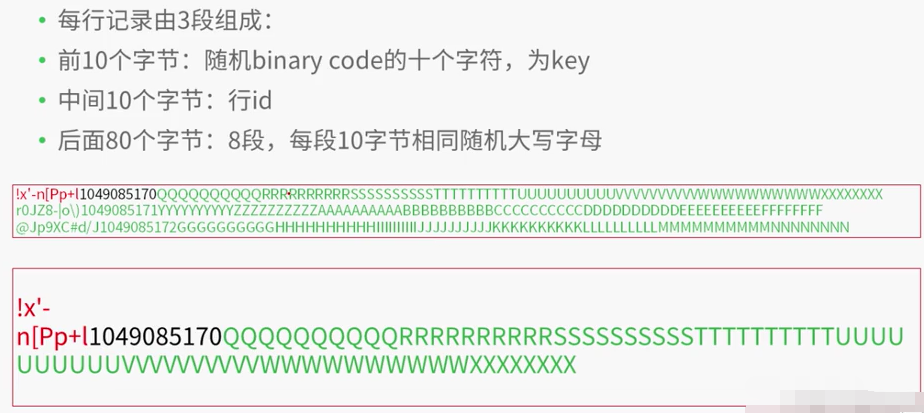

[root@master hadoop-2.7.4]# ./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar teragen

teragen <num rows> <output dir>

[root@master hadoop-2.7.4]# ./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar teragen 10000 /teragen

17/12/17 16:36:48 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

17/12/17 16:36:49 INFO terasort.TeraSort: Generating 10000 using 2

17/12/17 16:36:50 INFO mapreduce.JobSubmitter: number of splits:2

17/12/17 16:36:50 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1513499297109_0002

17/12/17 16:36:50 INFO impl.YarnClientImpl: Submitted application application_1513499297109_0002

17/12/17 16:36:50 INFO mapreduce.Job: The url to track the job: http://centos:8088/proxy/application_1513499297109_0002/

17/12/17 16:36:50 INFO mapreduce.Job: Running job: job_1513499297109_0002

17/12/17 16:37:01 INFO mapreduce.Job: Job job_1513499297109_0002 running in uber mode : false

17/12/17 16:37:01 INFO mapreduce.Job: map 0% reduce 0%

17/12/17 16:37:19 INFO mapreduce.Job: map 100% reduce 0%

17/12/17 16:37:21 INFO mapreduce.Job: Job job_1513499297109_0002 completed successfully

17/12/17 16:37:21 INFO mapreduce.Job: Counters: 31

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=240922

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=164

HDFS: Number of bytes written=1000000

HDFS: Number of read operations=8

HDFS: Number of large read operations=0

HDFS: Number of write operations=4

Job Counters

Launched map tasks=2

Other local map tasks=2

Total time spent by all maps in occupied slots (ms)=30146

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=30146

Total vcore-milliseconds taken by all map tasks=30146

Total megabyte-milliseconds taken by all map tasks=30869504

Map-Reduce Framework

Map input records=10000

Map output records=10000

Input split bytes=164

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=434

CPU time spent (ms)=1400

Physical memory (bytes) snapshot=161800192

Virtual memory (bytes) snapshot=4156805120

Total committed heap usage (bytes)=35074048

org.apache.hadoop.examples.terasort.TeraGen$Counters

CHECKSUM=21555350172850

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=1000000
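The byte counts above follow from TeraGen's fixed record size: every row is 100 bytes, so 10000 rows give 1,000,000 bytes, written here by 2 map tasks. The short Python sketch below reproduces that arithmetic. The helper name is hypothetical, and it assumes the row count divides evenly among the map tasks, as it does in this run.

```python
ROW_BYTES = 100  # each TeraGen row is 100 bytes (10-byte key plus 90 bytes of payload)


def teragen_sizes(num_rows, num_maps):
    """Return (total bytes, bytes per map output file) for an even split."""
    if num_rows % num_maps != 0:
        raise ValueError("this sketch only handles an even split of rows")
    total = num_rows * ROW_BYTES
    return total, total // num_maps


if __name__ == "__main__":
    total, per_file = teragen_sizes(10000, 2)
    print(total, per_file)  # 1000000 500000
```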

[root@master hadoop-2.7.4]# ./bin/hdfs dfs -ls /teragen

Found 3 items

-rw-r--r-- 1 root supergroup 0 2017-12-17 16:37 /teragen/_SUCCESS

-rw-r--r-- 1 root supergroup 500000 2017-12-17 16:37 /teragen/part-m-00000

-rw-r--r-- 1 root supergroup 500000 2017-12-17 16:37 /teragen/part-m-00001

[root@centos hadoop-2.7.4]# ./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar terasort /teragen /terasort

17/12/17 16:46:24 INFO terasort.TeraSort: starting

17/12/17 16:46:25 INFO input.FileInputFormat: Total input paths to process : 2

Spent 135ms computing base-splits.

Spent 3ms computing TeraScheduler splits.

Computing input splits took 139ms

Sampling 2 splits of 2

Making 1 from 10000 sampled records

Computing parititions took 384ms

Spent 530ms computing partitions.

17/12/17 16:46:26 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

17/12/17 16:46:27 INFO mapreduce.JobSubmitter: number of splits:2

17/12/17 16:46:27 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1513499297109_0003

17/12/17 16:46:28 INFO impl.YarnClientImpl: Submitted application application_1513499297109_0003

17/12/17 16:46:28 INFO mapreduce.Job: The url to track the job: http://centos:8088/proxy/application_1513499297109_0003/

17/12/17 16:46:28 INFO mapreduce.Job: Running job: job_1513499297109_0003

17/12/17 16:46:38 INFO mapreduce.Job: Job job_1513499297109_0003 running in uber mode : false

17/12/17 16:46:38 INFO mapreduce.Job: map 0% reduce 0%

17/12/17 16:47:19 INFO mapreduce.Job: map 100% reduce 0%

17/12/17 16:47:41 INFO mapreduce.Job: map 100% reduce 100%

17/12/17 16:47:44 INFO mapreduce.Job: Job job_1513499297109_0003 completed successfully

17/12/17 16:47:45 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=1040006

FILE: Number of bytes written=2445488

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=1000208

HDFS: Number of bytes written=1000000

HDFS: Number of read operations=9

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=2

Launched reduce tasks=1

Data-local map tasks=2

Total time spent by all maps in occupied slots (ms)=87622

Total time spent by all reduces in occupied slots (ms)=12795

Total time spent by all map tasks (ms)=87622

Total time spent by all reduce tasks (ms)=12795

Total vcore-milliseconds taken by all map tasks=87622

Total vcore-milliseconds taken by all reduce tasks=12795

Total megabyte-milliseconds taken by all map tasks=89724928

Total megabyte-milliseconds taken by all reduce tasks=13102080

Map-Reduce Framework

Map input records=10000

Map output records=10000

Map output bytes=1020000

Map output materialized bytes=1040012

Input split bytes=208

Combine input records=0

Combine output records=0

Reduce input groups=10000

Reduce shuffle bytes=1040012

Reduce input records=10000

Reduce output records=10000

Spilled Records=20000

Shuffled Maps =2

Failed Shuffles=0

Merged Map outputs=2

GC time elapsed (ms)=3246

CPU time spent (ms)=3580

Physical memory (bytes) snapshot=400408576

Virtual memory (bytes) snapshot=6236995584

Total committed heap usage (bytes)=262987776

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=1000000

File Output Format Counters

Bytes Written=1000000

17/12/17 16:47:45 INFO terasort.TeraSort: done
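The "Sampling 2 splits" and "Making 1 from 10000 sampled records" lines above come from the partitioning step: TeraSort samples input keys and derives key ranges so that each reducer receives a contiguous range and the reducer outputs, read in order, are globally sorted. The Python sketch below is a simplified illustration of range partitioning under that idea. It is not Hadoop's implementation, and the function names are hypothetical.

```python
import bisect


def pick_split_points(sample_keys, num_reducers):
    """Choose num_reducers - 1 cut points from a sample of keys."""
    ordered = sorted(sample_keys)
    step = len(ordered) / num_reducers
    return [ordered[int(step * i)] for i in range(1, num_reducers)]


def partition(key, split_points):
    """Return the index of the reducer whose key range contains `key`."""
    return bisect.bisect_right(split_points, key)


if __name__ == "__main__":
    sample = ["a", "c", "e", "g"]
    points = pick_split_points(sample, 2)
    print(points, [partition(k, points) for k in ["b", "e", "z"]])
    # A single reducer needs no cut points at all.
    print(pick_split_points(sample, 1))
```

With a single reducer, as in this run, there are no cut points and every key goes to partition 0.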

[root@centos hadoop-2.7.4]# ./bin/hdfs dfs -ls /terasort

Found 3 items

-rw-r--r-- 1 root supergroup 0 2017-12-17 16:47 /terasort/_SUCCESS

-rw-r--r-- 10 root supergroup 0 2017-12-17 16:46 /terasort/_partition.lst

-rw-r--r-- 1 root supergroup 1000000 2017-12-17 16:47 /terasort/part-r-00000

[root@centos hadoop-2.7.4]# ./bin/yarn jar /opt/hadoop-2.7.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar teravalidate /terasort /report

17/12/17 17:03:46 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

17/12/17 17:03:48 INFO input.FileInputFormat: Total input paths to process : 1

Spent 56ms computing base-splits.

Spent 3ms computing TeraScheduler splits.

17/12/17 17:03:48 INFO mapreduce.JobSubmitter: number of splits:1

17/12/17 17:03:49 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1513499297109_0007

17/12/17 17:03:49 INFO impl.YarnClientImpl: Submitted application application_1513499297109_0007

17/12/17 17:03:49 INFO mapreduce.Job: The url to track the job: http://centos:8088/proxy/application_1513499297109_0007/

17/12/17 17:03:49 INFO mapreduce.Job: Running job: job_1513499297109_0007

17/12/17 17:04:00 INFO mapreduce.Job: Job job_1513499297109_0007 running in uber mode : false

17/12/17 17:04:00 INFO mapreduce.Job: map 0% reduce 0%

17/12/17 17:04:08 INFO mapreduce.Job: map 100% reduce 0%

17/12/17 17:04:19 INFO mapreduce.Job: map 100% reduce 100%

17/12/17 17:04:20 INFO mapreduce.Job: Job job_1513499297109_0007 completed successfully

17/12/17 17:04:20 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=92

FILE: Number of bytes written=241805

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=1000105

HDFS: Number of bytes written=22

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=4952

Total time spent by all reduces in occupied slots (ms)=8032

Total time spent by all map tasks (ms)=4952

Total time spent by all reduce tasks (ms)=8032

Total vcore-milliseconds taken by all map tasks=4952

Total vcore-milliseconds taken by all reduce tasks=8032

Total megabyte-milliseconds taken by all map tasks=5070848

Total megabyte-milliseconds taken by all reduce tasks=8224768

Map-Reduce Framework

Map input records=10000

Map output records=3

Map output bytes=80

Map output materialized bytes=92

Input split bytes=105

Combine input records=0

Combine output records=0

Reduce input groups=3

Reduce shuffle bytes=92

Reduce input records=3

Reduce output records=1

Spilled Records=6

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=193

CPU time spent (ms)=1250

Physical memory (bytes) snapshot=281731072

Virtual memory (bytes) snapshot=4160716800

Total committed heap usage (bytes)=139284480

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=1000000

File Output Format Counters

Bytes Written=22

[root@centos hadoop-2.7.4]# ./bin/hdfs dfs -ls /report

Found 2 items

-rw-r--r-- 1 root supergroup 0 2017-12-17 17:04 /report/_SUCCESS

-rw-r--r-- 1 root supergroup 22 2017-12-17 17:04 /report/part-r-00000

[root@centos hadoop-2.7.4]# ./bin/hdfs dfs -cat /report/part-r-00000

checksum 139abefd74b2
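The report prints the checksum in hexadecimal, while the TeraGen job earlier reported `CHECKSUM=21555350172850` in decimal. Converting one to the other shows that they are the same value, which suggests the sorted data contains the same rows that were generated. A small Python check, using only the two numbers from this transcript:

```python
TERAGEN_CHECKSUM = 21555350172850          # decimal value from the TeraGen counters
TERAVALIDATE_CHECKSUM = "139abefd74b2"     # hex value from /report/part-r-00000


def checksums_match(decimal_value, hex_value):
    """Compare a decimal counter value with a hexadecimal report value."""
    return decimal_value == int(hex_value, 16)


if __name__ == "__main__":
    print(format(TERAGEN_CHECKSUM, "x"))  # 139abefd74b2
    print(checksums_match(TERAGEN_CHECKSUM, TERAVALIDATE_CHECKSUM))  # True
```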
